Exploring Crawlab: An Enterprise Web Scraping Management Platform

Introduction

Acquiring and managing online information has become crucial for data-driven organizations. Crawlab is an enterprise-level web scraping management platform that works out of the box and is aimed at both enterprises and individual developers. Whatever the size of your team, it offers a professional and efficient way to manage web scraping.

Core Features

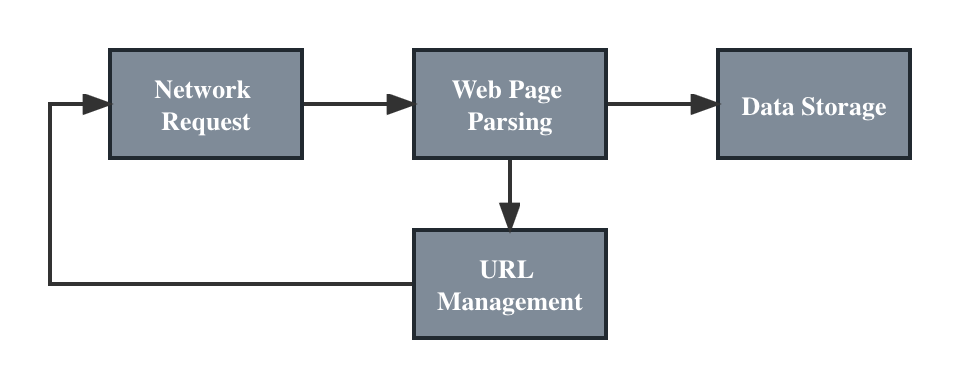

Crawlab's core features include distributed system management, spider task management and scheduling, file editing, message notifications, dependency management, Git integration, and performance monitoring. Its distributed node management lets spider programs run efficiently across multiple servers. Crawlab also automates uploading, deployment, and monitoring, which would otherwise be manual work, so you can schedule spider tasks and view spider running status and task logs in real time.
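
To make the idea of running spiders across multiple nodes more concrete, here is a minimal conceptual sketch in Python. It is not Crawlab's API or its actual scheduling logic: the `Node` class, the `assign_task` function, the node names, and the "fewest running tasks" rule are all hypothetical, chosen only to illustrate how a management layer might spread spider tasks over several servers.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical worker node (illustrative only, not a Crawlab class)."""
    name: str
    running: list = field(default_factory=list)


def assign_task(nodes, spider_name):
    """Assign a spider task to the node with the fewest running tasks.

    This least-loaded rule is an assumption made for this example.
    Ties go to the node that appears first in the list.
    """
    target = min(nodes, key=lambda n: len(n.running))
    target.running.append(spider_name)
    return target.name


# Three made-up nodes and four made-up spider names.
nodes = [Node("master"), Node("worker-1"), Node("worker-2")]
assignments = [assign_task(nodes, s) for s in ["news", "prices", "jobs", "reviews"]]
print(assignments)  # → ['master', 'worker-1', 'worker-2', 'master']
```

In a real deployment the platform handles this placement for you. The sketch only shows the kind of bookkeeping that no longer has to be done by hand.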